Published on August 10, 2023 by Saurabh Rathi

The financial markets are highly regulated, with a strong focus on compliance and risk management. Continued monitoring of data and reporting to regulators are top priorities. Given that large financial organisations deal with vast amounts of data, they need to ensure data accuracy and integrity to minimise risk. Moreover, time is of the essence when providing financial services, and a single data error could snowball rapidly in downstream processes, leading to financial and reputational loss.

Data management aims to create a single reference source for all critical business data, reducing error and redundancy in business processes. Data management is critical for research, trading, risk management and accounting, enabling data providers to integrate, cleanse and connect data from multiple vendors and make it available across the enterprise. Quickly accessing such structured data and stitching it to other information is critical to the success of any business in the financial markets.

The valuation and movement of an index can influence the financial markets and investors. Index providers are required to meet the highest standards of data quality and calculation accuracy. However, creating and maintaining an index is a difficult and time-consuming process that calls for both industry expertise and data analysis. To simplify and accelerate management of indices and data and their time to market, the sector has been implementing new technologies.

Index providers of all sizes need future-proof, flexible and agile data management systems that can handle rapid change.

Key challenges index providers face in data management

Index providers are under increased pressure as index-based investing gains popularity due to its transparency and lower cost. Data management is a major challenge for them because the sector is operationally demanding and covers many types of indices, each with its own set of calculations.

Depending on how an index is calculated, a number of factors need to be considered, such as the liquidity of constituent components and the relative weighting of each component. Managing such complex indices takes several days and countless person-hours to achieve the high level of accuracy required.

The challenges around data for index providers are as follows:

- Data consolidation from internal and external sources
- Maintaining superior data quality and eliminating duplication
- Examining large volumes of structured and non-structured data, including securities trading data, market data, newswires, social media and annual reports
- In-detail reviews and index rebalancing
- Creating new indices and reassessing current ones through a series of restructured tasks such as universe selection, benchmarking against historical data and review

Data management presents challenges from multiple perspectives for any index provider tasked with managing large amounts of highly sensitive data, which could include customer data and key proprietary reference data. The quality and accuracy of data matter not only from a financial perspective but also for regulatory compliance.

Importance of data quality

Data quality is a must for index providers. Asset managers and ETF issuers that use index providers as a critical service are under increasing pressure to safeguard and demonstrate their operational resilience. This means index providers come under heightened scrutiny – as service providers, rather than just as data providers. Clients want more insight, transparency and comfort about the index providers’ operational processes. Consequently, they request information on areas of operation not currently covered by regulation. Examples include how they create new indices, their rebalancing and reconstitution cycles, how they deal with errors and even how effective controls of their systems and technology are. Increased data quality can, therefore, provide transparency to such financial institutions.

The growing popularity of ESG investing brings consumer protection to the fore, including whether products deliver on a desired outcome beyond investment income. While there are regulatory parameters applicable to index providers producing ESG indices, regulation in this area is still relatively underdeveloped, so data quality is under constant review.

Maintaining data quality is, therefore, imperative, as index providers have to provide transparency and comfort to an increasing range of stakeholders, from regulators to asset managers, and even to individual retail clients. Audits of such data quality have to be versatile and innovative enough that senior management and those charged with governance at the index provider, as well as regulators and clients, can be confident that the index provider is complying with regulations and contractual obligations, following through on methodologies and managing operational risk appropriately.

Highlighted below are seven pillars that indicate quality data:

Data testing and comprehensive quality assurance address these seven pillars of data quality. Data researchers have to be consistent in their approach and methodology to extract data from primary sources in a timely manner. Such raw data has to be standardised in the database validly and accurately so stakeholders can use it to make informed decisions. Each index provider requires technology and domain experts to define testing objectives and apply the sector’s best quality practices to ensure the index products are error-free.

Data integration is also crucial because it provides a way to access and interpret data and information stored in various silos. A single solution can be used to quickly access all the data and increase understanding of what needs to be improved instead of using multiple systems to pool the pertinent data.

Data-quality testing and data integration

As stated above, index providers have to be comprehensive in their approach to standardising the quality of raw data provided by vendors. They need to test the quality of data and make changes to their approach, as required. The index provider conducts deep quality tests on all major reference datasets such as free float market cap, industry classification and shares outstanding along with pricing services and model portfolios to understand the current data standards and the optimisation requirements of the vendor data and internal research.
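The kind of basic quality tests described above can be sketched in a few lines of Python. This is only an illustrative sketch, not any index provider's actual procedure: the field names (`security_id`, `shares_outstanding`, `free_float_pct`, `industry_code`) and the rules themselves are assumptions chosen for the example.

```python
from collections import Counter

# Hypothetical field names for a reference-data record (assumed for illustration).
REQUIRED_FIELDS = ("security_id", "shares_outstanding", "free_float_pct", "industry_code")


def check_records(records):
    """Return a list of (security_id, issue) tuples for basic quality failures."""
    issues = []
    # Count identifiers up front so duplicates can be flagged on every copy.
    id_counts = Counter(r.get("security_id") for r in records)
    for r in records:
        sid = r.get("security_id")
        # Completeness: every required field must be present and non-empty.
        for field in REQUIRED_FIELDS:
            if r.get(field) in (None, ""):
                issues.append((sid, f"missing {field}"))
        # Validity: shares outstanding must be positive.
        shares = r.get("shares_outstanding")
        if isinstance(shares, (int, float)) and shares <= 0:
            issues.append((sid, "non-positive shares_outstanding"))
        # Validity: free float is a percentage between 0 and 100.
        ff = r.get("free_float_pct")
        if isinstance(ff, (int, float)) and not 0 <= ff <= 100:
            issues.append((sid, "free_float_pct outside 0-100"))
        # Uniqueness: the same identifier should not appear twice.
        if sid is not None and id_counts[sid] > 1:
            issues.append((sid, "duplicate security_id"))
    return issues
```

In practice, checks like these would typically run on each vendor file before it is compared against internal research, so that structural problems are separated from genuine methodology differences.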

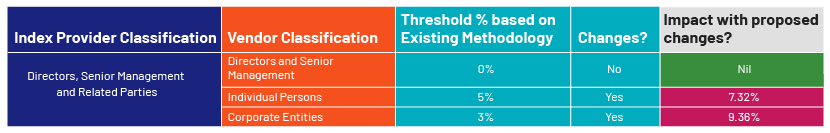

The example below helps us understand data-quality testing and integration with the existing data of the index provider. We consider the attribute of free-float research, which includes parameters such as shareholder classification, timeliness of collection of data points on the shareholdings and the difference in methodology in understanding free-float research. Here we consider the underlying data used by a specific index provider and compare this to vendor data based on published methodology. There are more parameters that could be used for testing, but for this scenario, we limit these to three.

The exhibit above illustrates how an index provider and data vendor have different methodologies, based on shareholder classification and thresholds. The total methodology will be affected by the threshold restrictions for individuals and corporate entities by around 7.32% and 9.36%, respectively. These details are essential for the index provider to decide whether to align its procedure with the data provider’s or not. On the other hand, the index provider could request specific datasets from the data source to meet its needs. This helps the index provider make an informed decision using vendor data.

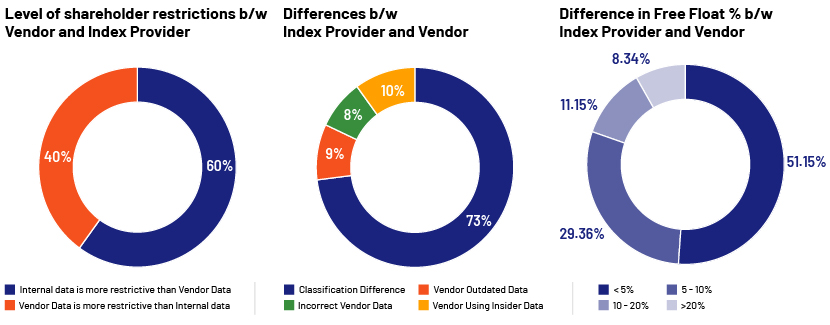

The charts above compare the levels of limitation in a sample of the underlying index data and the variance in free-float values between the vendor and the index provider. The first chart contrasts vendor data with the index provider's in-house analysis of shareholder restrictions. The second chart shows why the vendor's data and internal data diverge: classification accounts for 73% of the discrepancy, and vendor use of insider information for 10%. The third chart shows that the classification difference is less than 5% for 51.15% of the evaluated owners and 5-10% for a further 29.36% of the shareholders. Overall, this helps the index provider choose which part of the vendor data's quality to research and, if data integration is likely, what timeframe to set for such automation.
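A comparison of this kind, grouping shareholders by how far the vendor's value sits from the in-house value, could be sketched as follows. The band edges (under 5, 5-10, above 10 percentage points), the holder keys and the function name are assumptions for illustration only; the figures quoted above come from the author's charts, not from this code.

```python
def bucket_differences(in_house, vendor):
    """Share (%) of common holders falling in each absolute-difference band.

    Both arguments map a holder identifier to a percentage value
    (hypothetical structure, assumed for this sketch).
    """
    # Only holders present in both datasets can be compared.
    common = sorted(set(in_house) & set(vendor))
    counts = {"<5": 0, "5-10": 0, ">10": 0}
    for key in common:
        diff = abs(in_house[key] - vendor[key])
        if diff < 5:
            counts["<5"] += 1
        elif diff <= 10:
            counts["5-10"] += 1
        else:
            counts[">10"] += 1
    total = len(common)
    if total == 0:
        return {band: 0.0 for band in counts}
    # Convert counts to percentages of the compared holders.
    return {band: round(100 * n / total, 2) for band, n in counts.items()}
```

A distribution concentrated in the lowest band would suggest the vendor data is close enough to integrate with light review, while a heavy upper band points to a methodology difference worth investigating first.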

Once such data testing is complete, the index provider knows whether it can stream the data directly into its systems or needs additional support to review it. The decisions it takes on the proposed changes could further affect the results, so it needs to assess the impact of any accepted change on the methodology it has followed in the past and, in turn, on the overall business of the institution. Such reviews aim to reduce manual effort for the index provider. In the example above, this enables a simplified methodology while maintaining a larger universe. However, the scope and reason for data integration may vary by vendor.

Regulatory landscape

In addition to data integration and quality, regulatory requirements place a high priority on data management. For years, the idea of customising an index meant splitting up an existing broad-based index. Benchmarks can give rise to conflicts of interest, as evidenced by controversies involving the manipulation of benchmarks such as the London Interbank Offered Rate (LIBOR) and the Euro Interbank Offered Rate (EURIBOR) and claims that other benchmarks may also have been manipulated. To guarantee the precision and integrity of benchmarks that are provided and measured, a harmonised framework needed to be put in place.

The LIBOR scandal of 2012 prompted the European Commission to propose a regulation on indices used as benchmarks in financial instruments and financial contracts. The resulting Benchmark Regulation [Regulation (EU) 2016/1011] became effective on 30 June 2016 and was implemented in January 2018. The parties that administer an index, use an index (supervised entities) and provide data for an index (contributors) are all subject to the EU Benchmark Regulation and the UK Benchmark Regulation. These regulations also apply to index providers based outside the EU, the UK or both if their indices are used as benchmarks within either or both of those regions. Increased transparency and stringent oversight of the provision of benchmarks in the EU and/or the UK aim to protect consumers and investors exposed to benchmarks.

In 2022, the SEC started working on proposed regulatory compliance frameworks for index providers. It has expressed concern about the potential for index providers to front-run other market participants given the amount of information they have on corporate events and price action. The SEC has also been worried about the amount of discretion index providers use when crafting indices and wants to see more transparency around that process. Finally, the SEC wants to make sure that information on changes to an index is communicated clearly to market participants. If an index provider aligns with the IOSCO Principles, it may already have policies and procedures in place to deal with these issues, but that would be a voluntary arrangement.

The SEC proposed the Vendor Oversight Rule in late 2022, believing it would apply to certain relationships with index providers. This supports the SEC's ongoing focus on index providers and their interactions with advisors, including the SEC staff's inquiries into the rationale behind some index providers' eligibility for exemption from the requirement that investment advisors be registered.

How Acuity Knowledge Partners can help

With financial datasets fragmented across so many systems, data testing and rapid delivery of high-quality data at scale have become critical issues. We provide seamless testing with the production data necessary to evaluate, cleanse, mask test-case data and make that data continuously available for repeated testing cycles in any environment. Accuracy of this data is critical for data-driven business decisions, and identifying outliers early in the data-ingestion process can significantly reduce complexity downstream.

We offer a wide range of services to help streamline your index maintenance workflows. Our experience in managing indices for leading exchanges and index providers around the world enables us to stay ahead of the competition by evaluating and validating methodologies, data and calculation systems. Additionally, our reference data research and data governance services help you manage your data assets to ensure they are accurate and of the highest quality.

With deep knowledge of indexing, we aid organisations in seamlessly migrating existing processes from old technologies to the latest ones, helping you improve risk management and unlock the power of automation.

As one of the leading service providers in the index domain with over 15 years of experience, we support clients in identifying opportunities and building unique index solutions that provide differentiated exposure, with services spanning index reconciliation, data research, data quality, data governance and analytics.

Sources:

- https://www.thetradenews.com/lseg-snaps-up-xtf-in-latest-data-acquisition/
- https://www.collibra.com/us/en/blog/the-importance-of-data-quality-in-financial-services
- https://www.esma.europa.eu/esmas-activities/investors-and-issuers/benchmark-administrators

About the Author

Saurabh Rathi has been with Acuity Knowledge Partners for the past 11 years, supporting a global benchmark index provider in reference data analysis and stock research. He leads the team responsible for index research, index data processing and UAT, and contributes to projects related to equity index research.
