Published on June 29, 2021 by Sreeram Ramesh

Introduction

During the golden age of economic expansion in the 1950s, many fields of science and engineering witnessed rapid growth. In particular, two fields of mathematical and physical sciences –mathematical programming and Monte Carlo methods – witnessed exponential growth in both theory and practical applications. Along with the academic progress in natural sciences, a new field of science and technology – computer science – began to emerge. Since then, the platforms and tools used to analyse data have undergone a number of changes.

Today, Python has emerged as one of the most popular programming languages, fuelled by its open-source nature and a rich history of contributors from the fields of scientific computing, mathematics and engineering. Due to its clear and intuitive syntax, Python is perhaps the most user-friendly language compared with traditional languages such as Java and C++.

For young data scientists and data science aspirants, here is our quick guide that covers the libraries, functions, models and integrated development environments (IDEs) most frequently used in Python.

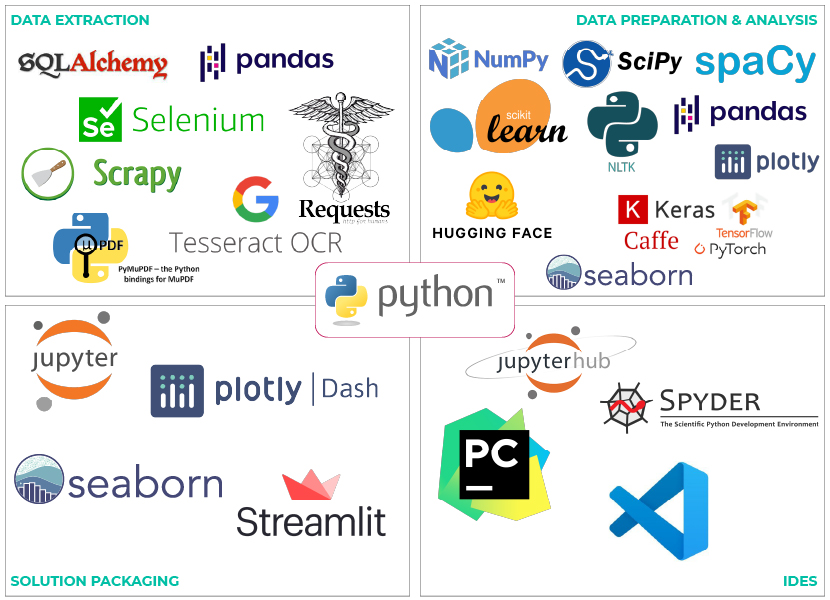

Data science solutions typically have four elements:

1. Data extraction

Data sources used most often in the finance domain and Python libraries and functions used to extract data from them include the following:

Databases

sqlalchemy: to connect to relational databases and extract data

pymongo: to perform MongoDB extraction. MongoDB is an NoSQL DB

Flat files

pandas: to import, export, and transform data from files, including .csv, .xlsx and json files

open(), read(), write(), close(): : to execute create, read, update, delete (CRUD) actions

Web scraping

Requests: to make HTTP requests such as GET and POST

BeautifulSoup: to web scrape data from most web pages

Selenium: to collect data from complex web pages

Scrapy: to acquire data in a scalable manner

PDF reports

Traditional libraries: PyPDF2, pdfminer, tika, PyMuPDF and fitz

OCR libraries: textract and pytesseract

2. Data preparation and analysis

Data preparation and analysis methods depend on the type of data:

Structured data: Tabular data

Unstructured data: Text, audio and images

Data preparation

pandas: to perform data transformations, aggregations, validations and cleansing

numpy: to carry out fast numerical dataset operations

nltk: to perform a wider range of NLP pre-processing functionality

Data analysis

SciPy: to perform different mathematical computations

scikit-learn: the most commonly used library for predictive analytics

nltk: to perform NLP analysis, including sentiment scoring

spacy: to classify entity names through entity recognition modelling

textblob and vaderSentiment: to perform sentiment analysis on textual data

keras: to build and transform deep-learning networks and datasets

pytorch: to build advanced and flexible deep-learning models

tensorflow: another advanced framework to build deep-learning models

word2vec, glove, BERT and USE: pre-trained embedded language models to build advanced NLP

huggingface: open source repository of many different kinds of NLP pre-trained models

matplotlib, seaborn, and plotly: to visualise data through graphs

3. Solution packaging

jupyter notebook: to rapidly code, visualise and present data applications and reports

powerbiclient: to visualise data insights and predictions

dash: to build graphical and user interface web page components

4. IDE

PyCharm, Visual Studio Code, jupyterlab and Spyder are the IDEs most commonly used by Python users. These IDEs come with a feature to easily perform version-control operations.

Conclusion

Open-source programmers around the world are constantly improvising Python and open-source libraries and tools, making the language really powerful, easy to use and agile. With leading financial institutions and corporations embracing hybrid cloud strategies to manage work-flow during the pandemic and beyond, Python has become an integral catalyst for transforming global businesses.

Bibliography

Tags:

What's your view?

About the Author

Sreeram Ramesh has over six years of experience in solving business problems using machine learning (ML), deep learning and statistical techniques. He has worked with multiple financial service providers and Fortune 500 companies, providing robust data science solutions. At Acuity Knowledge Partners, Sreeram creates artificial intelligence (AI)/ML engines to analyse textual data, and generate insights and proprietary scores. He holds a Bachelor of Engineering degree in Mechanical Engineering from Birla Institute of Technology and Science (BITS), Pilani.

Comments

12-Jul-2021 06:04:17 am

Like the way we think?

Next time we post something new, we'll send it to your inbox